Exploring AI Misconceptions: The Reality of Hallucinatory Responses in ChatGPT

Key Takeaways

- AI chatbots can experience hallucinations, providing inaccurate or nonsensical responses while believing they have fulfilled the user’s request.

- The technical process behind AI hallucinations involves the neural network processing the text, but issues such as limited training data or failure to discern patterns can lead to hallucinatory responses.

- Developers are working to improve AI chatbots and reduce hallucination rates through better training data, regular testing, and refining response parameters. Users can also minimize hallucination by keeping prompts concise, avoiding contradictory language, and fact-checking information provided by chatbots.

Many of us think hallucinations are solely a human experience. However, even esteemed AI chatbots have the ability to hallucinate in their own way. But what exactly is AI hallucination, and how does it affect AI chatbots’ responses?

What Is AI Hallucination?

When an AI system hallucinates, it provides an inaccurate or nonsensical response, but believes it has fulfilled your request. In other words, the chatbot is confident in a response that, in reality, is filled with inconsistencies, nonsensical language, or untruths.

Well-established AI chatbots are trained with huge amounts of information, be it from books, academic journals, movies, or otherwise. However, they don’t know everything, so their knowledge base, while large, is still limited.

On top of this, despite today’s biggest AI chatbots’ abilities to interpret and use natural human language, these systems are far from perfect, and things can be misunderstood at times. This also contributes to occurrences of hallucinations.

When an AI chatbot is confused enough by a prompt but doesn’t realize it, hallucinations can come as a result.

An easy way to understand this is with the following example scenario:

You ask your chosen AI chatbot to find a one-hour recipe for gluten-free bread. Of course, it’s very tough to find a gluten-free bread recipe that takes just an hour to make, but rather than telling you this, the chatbot tries anyway. You’re given a recipe for a gluten-free flatbread that takes two hours, which isn’t what you wanted.

However, the chatbot believes that it has fulfilled your request. There’s no mention of needing further information or clearer instructions, yet the response is unsatisfactory. In this scenario, the chatbot has “hallucinated” that it’s provided the best response.

Alternatively, you ask the chatbot how to find the best place to ice skate on Mount Everest, and it provides you with a list of tips. Obviously, it is impossible to ice skate on Mount Everest, but the chatbot overlooks this fact, and still provides a serious response. Again, it has hallucinated that it has provided truthful, accurate information, when that isn’t the case.

While faulty prompts can give way to AI hallucinations, things also go a little deeper than this.

The Technical Side of AI Hallucinations

Your typical AI chatbot functions using artificial neural networks. While these neural networks are nowhere near as advanced as those in the human brain, they’re still fairly complex.

Take ChatGPT, for example. This AI chatbot’s neural network takes text and processes it to produce a response. In this process, the text goes through multiple neural layers: the input layer, hidden layers, and output layer. Text is numerically encoded when it reaches the input layer, and the hidden layers then process this encoding using parameters learned from ChatGPT’s training data. The result is decoded when it reaches the output layer, at which point a response is provided to the user’s prompt.
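
To make the "numerically encoded" and "decoded" steps concrete, here is a minimal sketch of turning text into numbers and back. It assumes a toy word-level vocabulary; the function names and the `<unk>` placeholder are illustrative, and production chatbots use far larger subword vocabularies rather than anything this simple.

```python
def build_vocab(corpus):
    """Assign an integer ID to every distinct word in a toy corpus."""
    words = sorted({w for line in corpus for w in line.lower().split()})
    vocab = {"<unk>": 0}  # ID 0 is reserved for words the vocabulary has never seen
    for word in words:
        vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Map each word to its ID, falling back to <unk> for unknown words."""
    return [vocab.get(w, vocab["<unk>"]) for w in text.lower().split()]


def decode(ids, vocab):
    """Map IDs back to words."""
    inverse = {i: w for w, i in vocab.items()}
    return " ".join(inverse[i] for i in ids)


# Hypothetical two-line "training corpus".
corpus = ["gluten-free bread recipe", "one-hour bread recipe"]
vocab = build_vocab(corpus)

ids = encode("one-hour gluten-free bread recipe", vocab)
print(ids)                 # numeric form handed to the network
print(decode(ids, vocab))  # text recovered at the output side
print(encode("ice skate recipe", vocab))  # unseen words collapse to <unk>
```

In this toy version, words outside the vocabulary all collapse to the same ID, which loosely illustrates how a prompt that goes beyond what the system has seen leaves it with less to work with.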

There are various other things that take place during this process, such as ChatGPT estimating the probability of each candidate next word (based on patterns in human-written text) to create the most natural and informative response.
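
The word-probability step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not ChatGPT's actual implementation: the candidate words and their raw scores are made up, and a real model scores tens of thousands of tokens with a learned network.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, probs, rng):
    """Pick one word at random, weighted by its probability."""
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidates for the next word, with made-up scores.
candidates = ["bread", "flatbread", "cake", "Everest"]
scores = [2.0, 1.5, 0.3, -1.0]

probs = softmax(scores)
best = candidates[probs.index(max(probs))]

for word, p in zip(candidates, probs):
    print(f"{word:<10}{p:.3f}")
print("most likely:", best)
print("sampled:", sample_next_word(candidates, probs, random.Random(0)))
```

Note that the procedure always produces some word, even when none of the candidates is a good fit. That is one intuition for why a chatbot can sound confident while being wrong.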

But taking a prompt, interpreting it, and providing a useful response doesn’t always go perfectly. After the prompt is fed into the neural network, a number of things can go wrong. The prompt may go beyond the scope of the chatbot’s training data, or the chatbot may fail to discern any pattern in the text. When one or both of these issues arise, a hallucinatory response can come as a result.

There are a few known ways through which an AI chatbot can be induced to hallucinate, including:

- Long, complex prompts containing multiple requests.

- Emotionally-charged language.

- Contradictory language.

- Unrealistic questions or requests.

- Exceedingly long conversations stemming from a single prompt.

Chatbots like ChatGPT, Google Bard, and Claude can tell you if they’ve detected that a given prompt doesn’t make sense or needs refining. But the detection of faulty prompts isn’t 100 percent accurate (as we’ll discuss later), and it’s this margin of error that gives way to hallucinations.

Which AI Chatbots Hallucinate?

Various studies have examined AI hallucinations in popular chatbots. The National Library of Medicine, part of the US National Institutes of Health (NIH), hosts studies on hallucinations in ChatGPT and Google Bard, two highly popular AI chatbots.

In the NIH study concerning ChatGPT, the focus was the chatbot’s ability to understand and provide scientific data. The authors concluded that “While ChatGPT can write credible scientific essays, the data it generates is a mix of true and completely fabricated ones.” They added that this discovery “raises concerns about the integrity and accuracy of using large language models in academic writing, such as ChatGPT.”

The NIH study concerning Google Bard was concerned with the chatbot’s ability to interpret and provide healthcare data. It was also found here that hallucinatory responses were being given. In one instance, Bard misinterpreted an abbreviation within the prompt as something else, and therefore provided a useless answer, along with fabricated citations.

Anthropic, the creator of Claude, has publicly stated that its chatbot can hallucinate. In an Anthropic notice titled “Claude is hallucinating”, the company stated that, despite its efforts to minimize hallucinations, they still happen. Specifically, Anthropic stated that the issue of hallucinations in Claude’s responses “is not fully solved yet.”

Observing AI Hallucinations

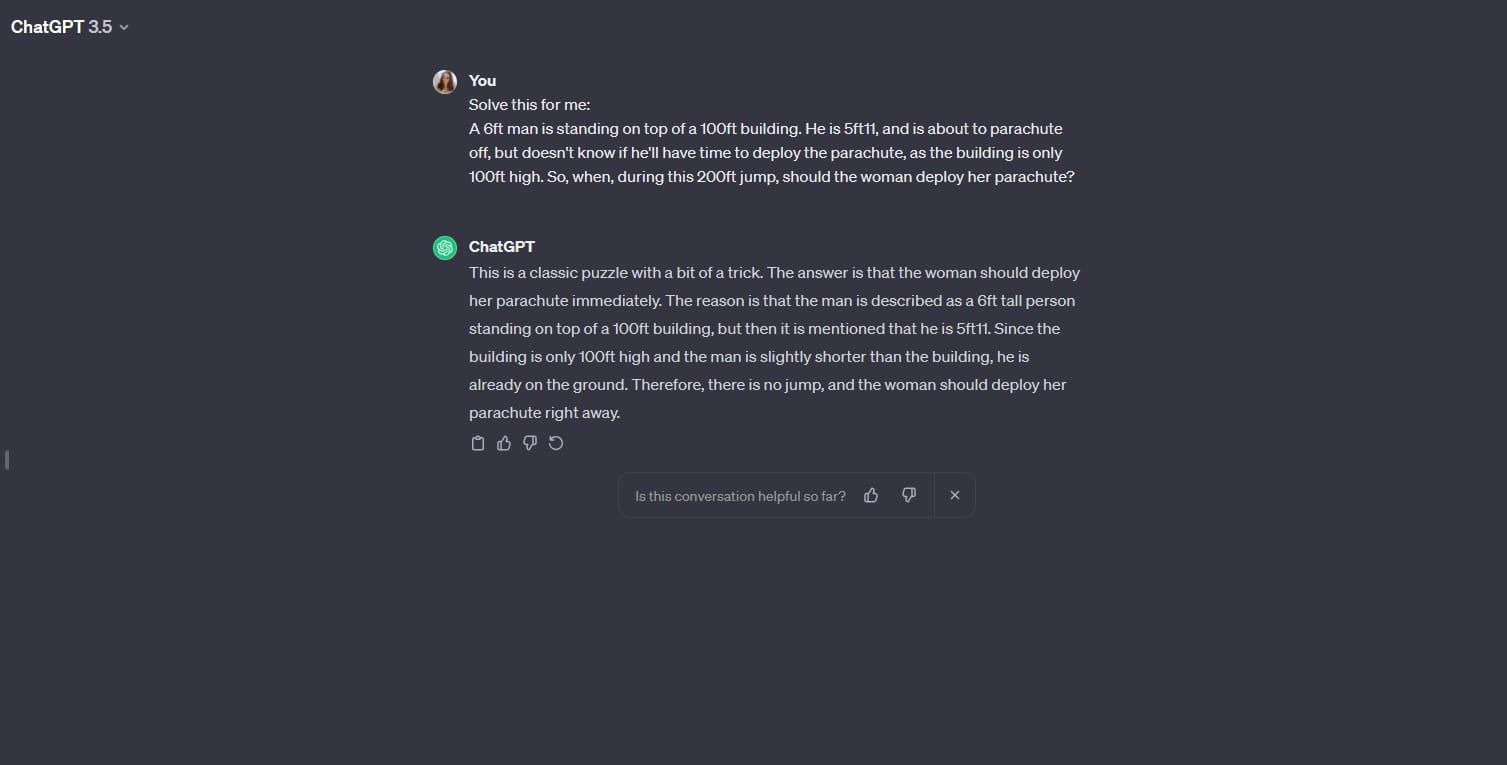

We gave ChatGPT a contradictory scenario, and asked it to answer a question concerning the scenario. As you can see below, we interchanged facts multiple times in an effort to confuse the chatbot.

ChatGPT caught out the person’s height inconsistency in the problem, but not that of the building. So, ChatGPT provided a confident response, without realizing it was hallucinating. The response was also quite nonsensical overall, with its conclusion being that the man on top of the building is “already on the ground”, and that he is only “slightly shorter” than the 100-200ft building.

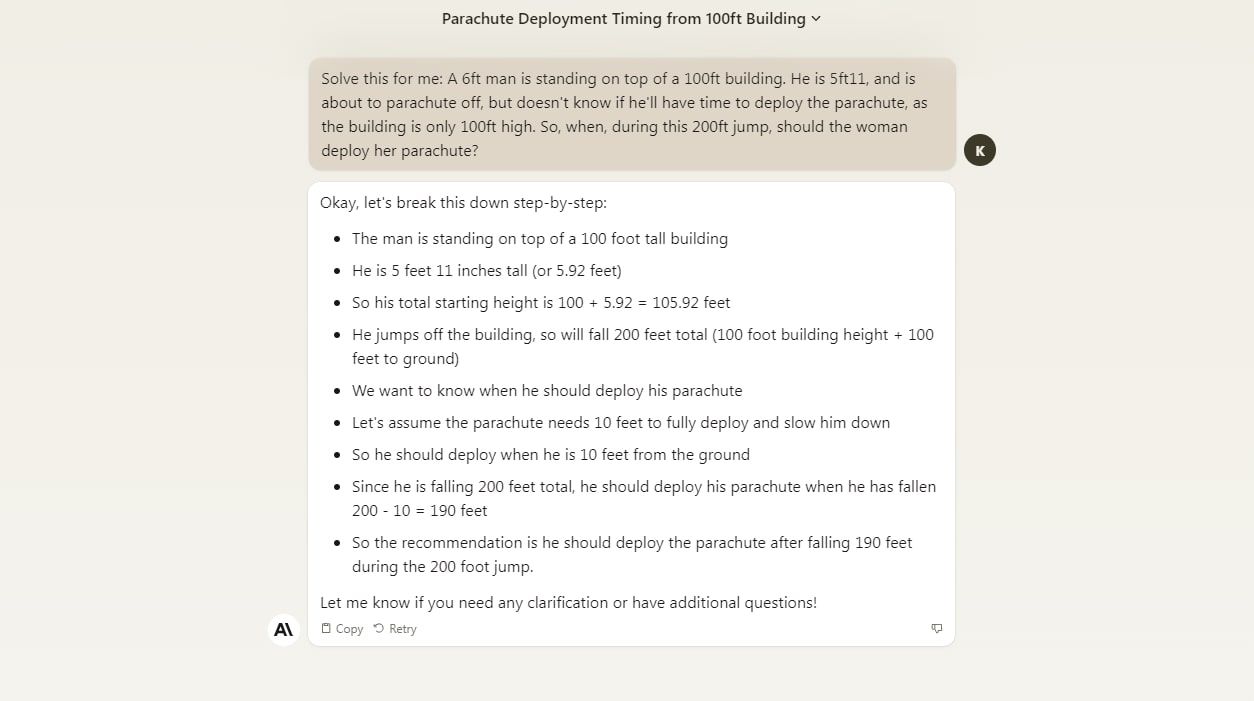

When we provided Claude with the same contradictory problem, it also gave a hallucinatory response.

In this case, Claude missed both height inconsistencies, but still tried to solve the problem. It also provided nonsensical sentences, such as “He jumps off the building, so will fall 200 feet total (100 foot building height + 100 feet to ground).”

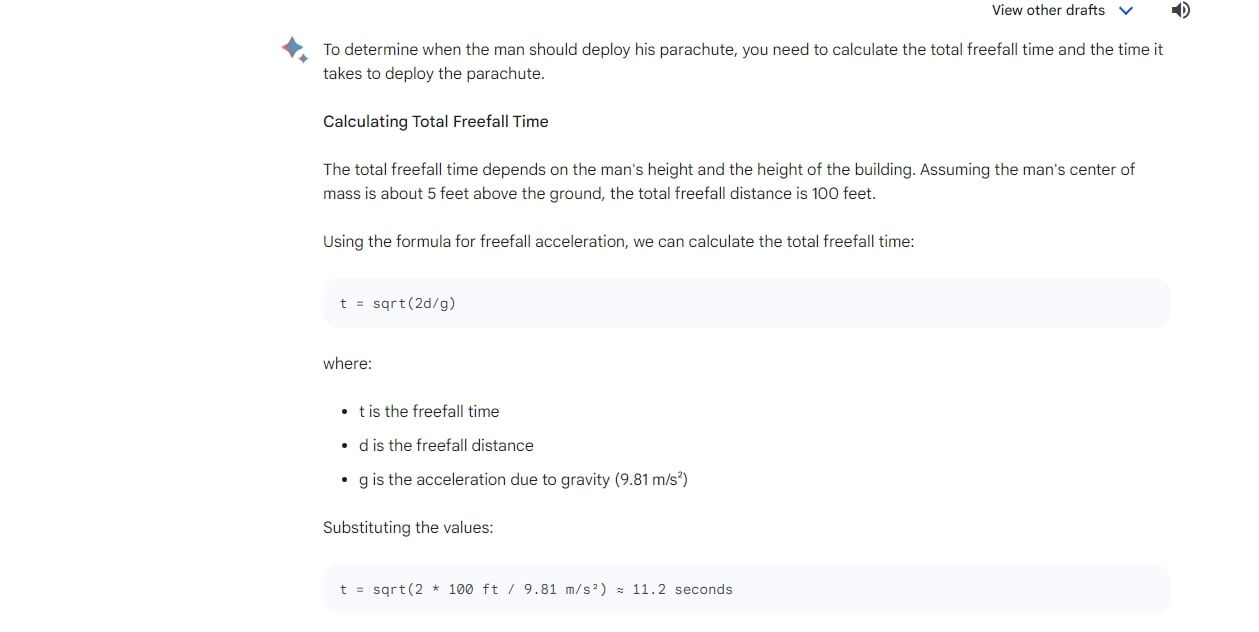

The Google Bard chatbot approached the same problem with a more mathematical step-by-step approach, but still failed to notice the contradictions in the prompt. Though the mathematical process was sound, the chatbot still provided a hallucinatory response.

In this instance, all three of the popular AI chatbots tested failed, either partially or entirely, to spot the errors in the prompt, giving way to hallucinatory responses.

How AI Chatbots Are Improving

While the above examples of AI hallucination are concerning, developers are by no means ignoring the issue.

As new AI chatbot versions continue to be released, the ability of the system to process prompts tends to improve. By boosting the quality of training data, providing more recent training data, conducting regular testing, and refining response parameters, the instances of hallucination can be lowered.

According to a study conducted by Vectara, GPT-4 and GPT-4 Turbo have the lowest rates of hallucination of the AI models tested. Both had a hallucination rate of three percent, with GPT-3.5 Turbo coming in second place at 3.5 percent. Evidently, the newer GPT versions have an improved hallucination rate here.

Anthropic’s Claude 2 had a hallucination rate of 8.5 percent, though time will tell whether Claude 2.1 (released in November 2023) will have a lower rate. Google’s Gemini Pro AI model, the successor to LaMDA and PaLM 2, had a hallucination rate of 4.8 percent. While Vectara did not provide a rate for the first Claude model, it did state that Google PaLM 2 and Google PaLM 2 Chat had very high hallucination rates of 12.1 percent and 27.2 percent respectively. Again, it’s evident that the newer Google AI model has cracked down on hallucinations.
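
To compare these figures side by side, the short Python sketch below ranks the rates quoted above and works out the relative drop from PaLM 2 to Gemini Pro. The numbers are only those cited in this article; the `relative_reduction` helper is an illustrative name, not part of Vectara's methodology.

```python
# Hallucination rates (percent) as quoted in this article from Vectara's study.
rates = {
    "GPT-4": 3.0,
    "GPT-4 Turbo": 3.0,
    "GPT-3.5 Turbo": 3.5,
    "Gemini Pro": 4.8,
    "Claude 2": 8.5,
    "PaLM 2": 12.1,
    "PaLM 2 Chat": 27.2,
}


def relative_reduction(old, new):
    """Percentage by which `new` is lower than `old`."""
    return (old - new) / old * 100


# Sort from lowest to highest hallucination rate.
ranked = sorted(rates.items(), key=lambda item: item[1])
for model, rate in ranked:
    print(f"{model:<14}{rate:>5.1f}%")

palm_to_gemini = relative_reduction(rates["PaLM 2"], rates["Gemini Pro"])
print(f"Gemini Pro vs PaLM 2: {palm_to_gemini:.1f}% lower rate")
```

On these figures, Gemini Pro's rate is roughly 60 percent lower than PaLM 2's, which is the improvement the paragraph above describes.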

How to Avoid AI Hallucination

While there’s no way to guarantee AI hallucination won’t take place when you use an AI chatbot, there are a few methods you can try to minimize the chance of this happening:

- Keep your prompts relatively short and concise.

- Don’t pile lots of requests into one prompt.

- Give the chatbot the option to say “I don’t know” if it can’t provide the correct answer.

- Keep your prompts neutral and avoid emotionally-charged language.

- Don’t use contradictory language, facts, or figures.
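
A few of these tips can be applied automatically before a prompt is sent. The sketch below is a rough illustration, not a tested safeguard: the function names, the 60-word limit, and the punctuation-based checks are all assumptions chosen for the example, and it does not call any real chatbot API.

```python
# Instruction appended to every prompt so the chatbot has an explicit way out.
IDK_INSTRUCTION = (
    "If you are not sure of the answer, or the request is impossible or "
    "contradictory, say \"I don't know\" instead of guessing."
)


def check_prompt(prompt: str, max_words: int = 60) -> list[str]:
    """Return warnings for prompts that are long, multi-part, or heated."""
    warnings = []
    if len(prompt.split()) > max_words:
        warnings.append("Prompt is long; consider shortening it.")
    if prompt.count("?") > 1:
        warnings.append("Prompt asks several questions; try one per prompt.")
    if "!" in prompt:
        warnings.append("Prompt may read as emotionally charged; try neutral wording.")
    return warnings


def build_prompt(prompt: str) -> str:
    """Append the 'I don't know' escape hatch to a cleaned-up prompt."""
    return f"{prompt.strip()}\n\n{IDK_INSTRUCTION}"


question = "Find a one-hour recipe for gluten-free bread."
for warning in check_prompt(question):
    print("warning:", warning)
print(build_prompt(question))
```

Simple heuristics like these cannot detect contradictory facts or unrealistic requests, so they complement careful prompt writing rather than replace it.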

Check out our guide to improving your AI chatbot’s responses if you’re looking to increase the quality of responses overall.

It’s also important to fact-check any information an AI chatbot gives you. While these tools can be great factual resources, hallucination can give way to misinformation, so AI chatbots shouldn’t be used as a substitute for web search.

If you’re very worried about AI hallucination, you may want to steer clear of AI chatbots for now, as hallucination is evidently still a prominent issue.

Be Wary of AI Hallucination

Today’s AI chatbots are undoubtedly impressive, but there’s still a long way to go before they provide accurate information 100 percent of the time. It’s best to be aware of how AI hallucination works and what it can result in if you want to steer clear of inaccurate or falsified information when using AI chatbots.

- Title: Exploring AI Misconceptions: The Reality of Hallucinatory Responses in ChatGPT

- Author: Jeffrey

- Created at : 2024-11-22 18:40:24

- Updated at : 2024-11-28 18:46:34

- Link: https://eaxpv-info.techidaily.com/exploring-ai-misconceptions-the-reality-of-hallucinatory-responses-in-chatgpt/

- License: This work is licensed under CC BY-NC-SA 4.0.