Injury-in-Fact

Quick Links

- How to Tell If ChatGPT Wrote That Article

- Can You Use AI to Detect AI-Generated Text?

- Tools to Check If An Article Was Written By ChatGPT

- Train Your Brain To Catch AI

Key Takeaways

You can tell a ChatGPT-written article by its simple, repetitive structure and its tendency to make logical and factual errors. Some tools are available for automatically detecting AI-generated text, but they are prone to false positives.

AI technology is changing what we see online and how we interact with the world. From a Midjourney image of the Pope in a puffer coat to large language models like ChatGPT, artificial intelligence is working its way into our lives.

The more sinister uses of AI tech, like a political disinformation campaign blasting out fake articles, mean we need to educate ourselves enough to spot the fakes. So how can you tell whether an article is actually AI-generated text?

How to Tell If ChatGPT Wrote That Article

Multiple methods and tools currently exist to help determine whether the article you’re reading was written by a robot. Not all of them are 100% reliable, and they can deliver false positives, but they do offer a starting point.

One big marker of human-written text, at least for now, is randomness. People write in different styles, use slang, and often make typos, whereas AI language models very rarely make those kinds of mistakes. According to MIT Technology Review, “human-written text is riddled with typos and is incredibly variable,” while AI text generators like ChatGPT are much better at producing typo-free text. Of course, a good copy editor will have the same effect, so you have to watch for more than just correct spelling.

Another indicator is punctuation patterns. Humans tend to use punctuation more randomly than an AI model does. AI-generated text also usually contains more short, common words like “the,” “it,” or “is” and fewer longer, rarer words, because large language models operate by predicting which word is most likely to come next rather than reaching for something that sounds good the way a human writer might.

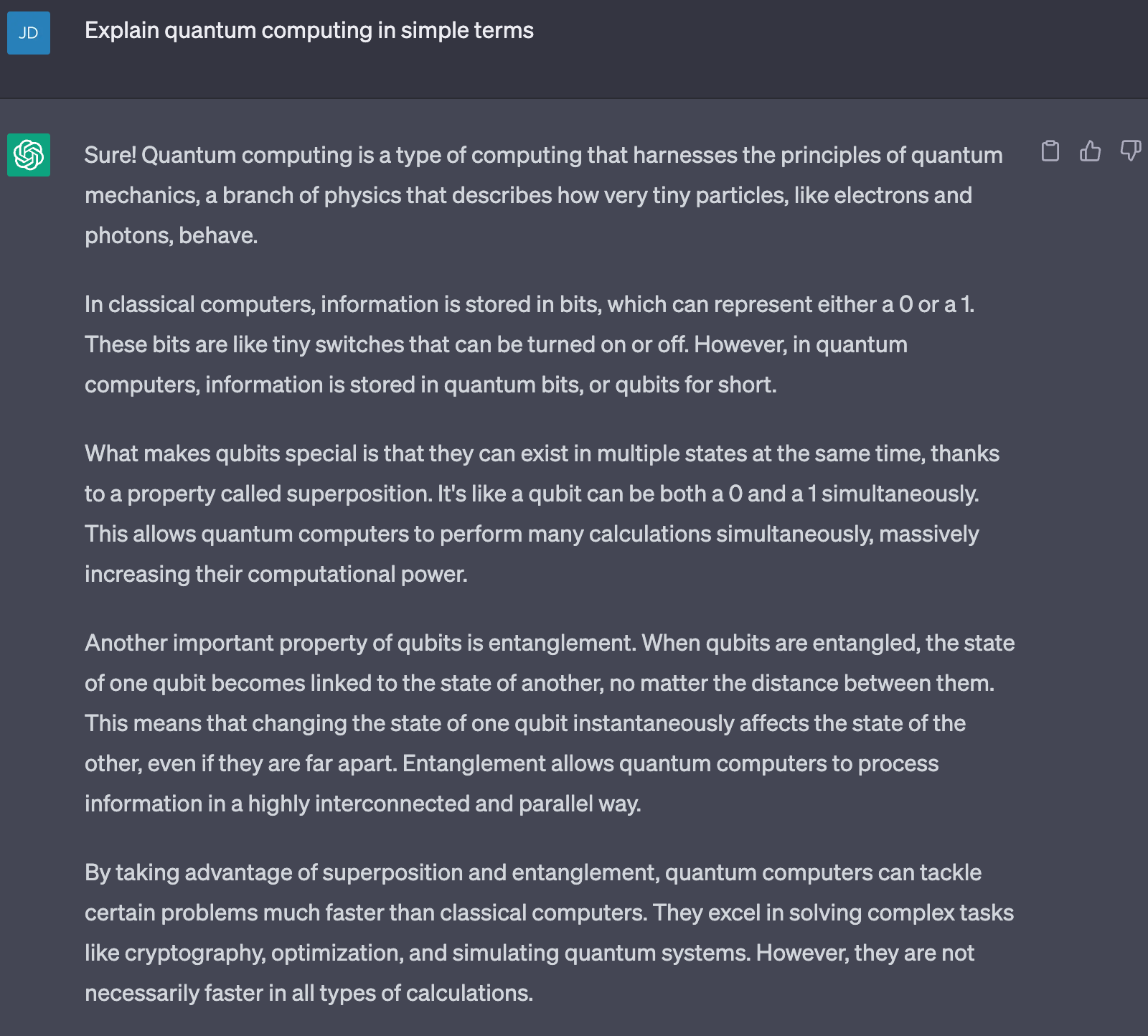

This is visible in ChatGPT’s response to one of the stock questions on OpenAI’s website. When asked, “Can you explain quantum computing in simple terms,” you get sentences like: “What makes qubits special is that they can exist in multiple states at the same time, thanks to a property called superposition. It’s like a qubit can be both a 0 and a 1 simultaneously.”

Short, simple connecting words are regularly used, the sentences are all a similar length, and paragraphs all follow a similar structure. The end result is writing that sounds and feels a bit robotic.
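The markers described above (similar sentence lengths and heavy use of short, common words) can be roughly measured in code. The following is a minimal Python sketch, not a real detector: the `COMMON_WORDS` list, the naive sentence splitter, and the function names are all assumptions made for illustration, and the numbers it returns are only hints.

```python
import re
from statistics import mean, pstdev

# Hypothetical list of short, common words; not taken from any detection tool.
COMMON_WORDS = {"the", "it", "is", "a", "an", "and", "of", "to", "in", "that"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def style_features(text):
    """Measure how uniform the sentences are and how common-word heavy the text is."""
    sentences = split_sentences(text)
    # Word count per sentence; a small spread means the sentences are similar in length.
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    words = [w.lower() for w in re.findall(r"[A-Za-z']+", text)]
    common = sum(w in COMMON_WORDS for w in words)
    return {
        "sentence_count": len(sentences),
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        "sentence_length_spread": pstdev(lengths) if lengths else 0.0,
        "common_word_ratio": common / len(words) if words else 0.0,
    }


if __name__ == "__main__":
    sample = "The cat is here. The dog is there. The bird is gone."
    print(style_features(sample))
```

A low `sentence_length_spread` combined with a high `common_word_ratio` matches the "robotic" pattern described above, but plenty of human writing looks the same, so treat the result as a prompt for a closer read rather than a verdict.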

Can You Use AI to Detect AI-Generated Text?

Large language models themselves can be trained to spot AI-generated writing. Training the system on two sets of text, one written by AI and the other written by people, can theoretically teach the model to recognize AI writing like that produced by ChatGPT.
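To make the two-sets-of-text idea concrete, here is a toy sketch in Python. It swaps the large language model for a much simpler technique, a word-count Naive Bayes classifier, purely to show the training setup; the class name, the labels, and the four training sentences are invented for illustration, and a real detector would need far more data and a far stronger model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Tiny multinomial Naive Bayes with add-one smoothing."""

    def fit(self, texts, labels):
        self.doc_counts = Counter(labels)  # number of training texts per label
        self.word_counts = {}              # label -> Counter of word frequencies
        for text, label in zip(texts, labels):
            self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, text):
        tokens = tokenize(text)
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus the smoothed log likelihood of each token.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for tok in tokens:
                score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented examples standing in for the two training sets.
HUMAN_SAMPLES = [
    "lol that movie was kinda wild tbh",
    "ugh my train was late again, typical",
]
AI_SAMPLES = [
    "It is important to note that the film is engaging.",
    "In conclusion, the train is a reliable mode of transport.",
]

detector = NaiveBayesDetector().fit(
    HUMAN_SAMPLES + AI_SAMPLES,
    ["human"] * len(HUMAN_SAMPLES) + ["ai"] * len(AI_SAMPLES),
)

if __name__ == "__main__":
    print(detector.predict("it is important to note that the result is clear"))
```

The design point is the labeled pairing: the model only learns what separates the two piles it was shown, which is one reason such detectors can misfire on writing styles missing from the training data.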

Researchers are also working on watermarking methods to detect AI articles and text. Tom Goldstein, who teaches computer science at the University of Maryland, is working on a way to build watermarks into AI language models in the hope that it can help detect machine-generated writing even if it’s good enough to mimic human randomness.

Invisible to the naked eye, the watermark would be detectable by an algorithm, which would label a text as human-written or AI-generated depending on how often it followed or broke the watermarking rules. Unfortunately, this method hasn’t tested as well on later models of ChatGPT.
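One way to picture a rule-counting detector of this kind is the Python sketch below. It is an illustration under stated assumptions, not the researchers' implementation: it assumes a rule in which a hash of each word pair marks the second word as "green" (allowed) about half the time, and it treats whitespace-separated words as tokens. The names `is_green`, `watermark_z_score`, `generate_watermarked`, and the `GAMMA` value are all hypothetical.

```python
import hashlib
import math

GAMMA = 0.5  # assumed share of words that count as "green" after any given word


def is_green(prev_token, token):
    """Assumed rule: hash the word pair; roughly a GAMMA share of pairs come out green."""
    digest = hashlib.sha256(f"{prev_token}\x00{token}".encode("utf-8")).digest()
    return digest[0] / 256 < GAMMA


def watermark_z_score(tokens):
    """Count rule-following pairs and compare with what chance alone would give."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, tok) for prev, tok in pairs)
    n = len(pairs)
    # Unwatermarked text should score near 0; rule-following text scores high.
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


def generate_watermarked(length, vocab):
    """Toy 'model' that always picks the first green word it finds in vocab."""
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        # Falls back to vocab[0] in the unlikely case that no word is green.
        tokens.append(next((w for w in vocab if is_green(prev, w)), vocab[0]))
    return tokens


if __name__ == "__main__":
    vocab = [f"w{i}" for i in range(50)]
    marked = generate_watermarked(101, vocab)
    print(watermark_z_score(marked))
```

When every pair follows the rule, the score works out to the square root of the number of pairs, so longer texts give a clearer signal. Text that ignores the rule hovers around zero, and a detector would flag anything above some chosen cutoff.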

Tools to Check If An Article Was Written By ChatGPT

You can find multiple copy-and-paste tools online to help you check whether an article is AI-generated. Many of them use language models, including GPT-4 itself, to scan the text.

Undetectable AI, for example, markets itself as a tool to make your AI writing indistinguishable from a human’s. Copy and paste the text into its window and the program checks it against results from other AI detection tools like GPTZero to assign it a likelihood score. In effect, it checks whether eight other AI detectors would think your text was written by a robot.

Originality is another tool, geared toward large publishers and content producers. It claims to be more accurate than others on the market and uses GPT-4 to help detect text written by AI. Other popular checking tools include:

Most of these tools give you a percentage value, like 96% human and 4% AI, to determine how likely it is that the text was written by a human. If the score is 40-50% AI or higher, it’s likely the piece was AI-generated.
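As a rough illustration of how several detector scores could be combined and read against that threshold, here is a small Python sketch. Averaging is an assumption made for simplicity, not a description of how any named tool works, and `combine_scores` and `AI_THRESHOLD` are hypothetical names.

```python
from statistics import mean

# Lower edge of the 40-50% range mentioned above, expressed as a fraction.
AI_THRESHOLD = 0.40


def combine_scores(ai_scores):
    """Average per-detector AI probabilities (each between 0 and 1) into one verdict."""
    if not ai_scores:
        raise ValueError("need at least one detector score")
    for score in ai_scores:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")
    ai_probability = mean(ai_scores)
    return {
        "ai_probability": ai_probability,
        "human_probability": 1.0 - ai_probability,
        "likely_ai": ai_probability >= AI_THRESHOLD,
    }


if __name__ == "__main__":
    # Three imaginary detectors that each see little sign of AI writing.
    print(combine_scores([0.04, 0.10, 0.07]))
```

Because each detector can produce false positives, a combined score is still an estimate; one detector that is badly wrong can pull the average across the threshold.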

While developers are working to make these tools better at detecting AI-generated text, none of them is fully accurate, and they can falsely flag human-written content as AI-generated. There’s also concern that, because large language models like GPT-4 are improving so quickly, detection models are constantly playing catch-up.

Train Your Brain To Catch AI

In addition to using tools, you can train yourself to catch AI-generated content. It takes practice, but over time you can get better at it.

Daphne Ippolito, a senior research scientist at Google’s AI division Google Brain, made a game called Real Or Fake Text (ROFT) that can help you separate human sentences from robotic ones by gradually training you to notice when a sentence doesn’t quite look right.

One common marker of AI text, according to Ippolito, is nonsensical statements like “it takes two hours to make a cup of coffee.” Ippolito’s game is largely focused on helping people detect those kinds of errors. In fact, there have been multiple instances of an AI writing program stating inaccurate facts with total confidence. You probably shouldn’t ask it to do your math assignment, either, as it doesn’t seem to handle numerical calculations very well.

Right now, these are the best methods we have for catching text written by an AI program. Language models are improving fast enough to render current detection methods outdated pretty quickly, however, leaving us in what Melissa Heikkilä, writing for MIT Technology Review, calls an arms race.

- Title: Injury-in-Fact

- Author: Jeffrey

- Created at : 2024-11-24 17:10:21

- Updated at : 2024-11-28 17:02:59

- Link: https://eaxpv-info.techidaily.com/injury-in-fact/

- License: This work is licensed under CC BY-NC-SA 4.0.