Limitations of ChatGPT Compared to Traditional Web Search Techniques

Do you use ChatGPT or similar AI tools in place of a good old-fashioned web search? It can be tempting to pass your queries off to a chatbot, but there are some good reasons to be cautious when doing so.

Can I Trust What ChatGPT Is Telling Me?

ChatGPT is more accessible than ever before. Not only can you create a free OpenAI account and use the GPT-3.5 model free of charge in a browser, but there are now countless mobile apps and other services that integrate with the service. You can even use a Shortcut to access ChatGPT via Siri on your iPhone or Apple Watch.

ChatGPT isn’t the only game in town either. Microsoft’s Bing AI uses the more advanced GPT-4 model (also used by ChatGPT Plus), and Google Bard is finally getting off the ground. It’s easy to ask an AI chatbot to summarize an argument, check a fact, or solve a problem for you. So easy that you might prefer it over performing an old-fashioned web search.

But chatbots are not necessarily bastions of the truth. They have their place, and they’re undoubtedly one of the most interesting things you can play with on the internet right now. However, they’ve yet to earn our implicit trust. We know this because the internet itself isn’t trustworthy.

The internet, or vast swathes of it, was used alongside books and other sources to train these models. The phrase “garbage in, garbage out” sums up the problem nicely: if you use bad information to train a large language model, it’s going to use that bad information to make bad judgments.

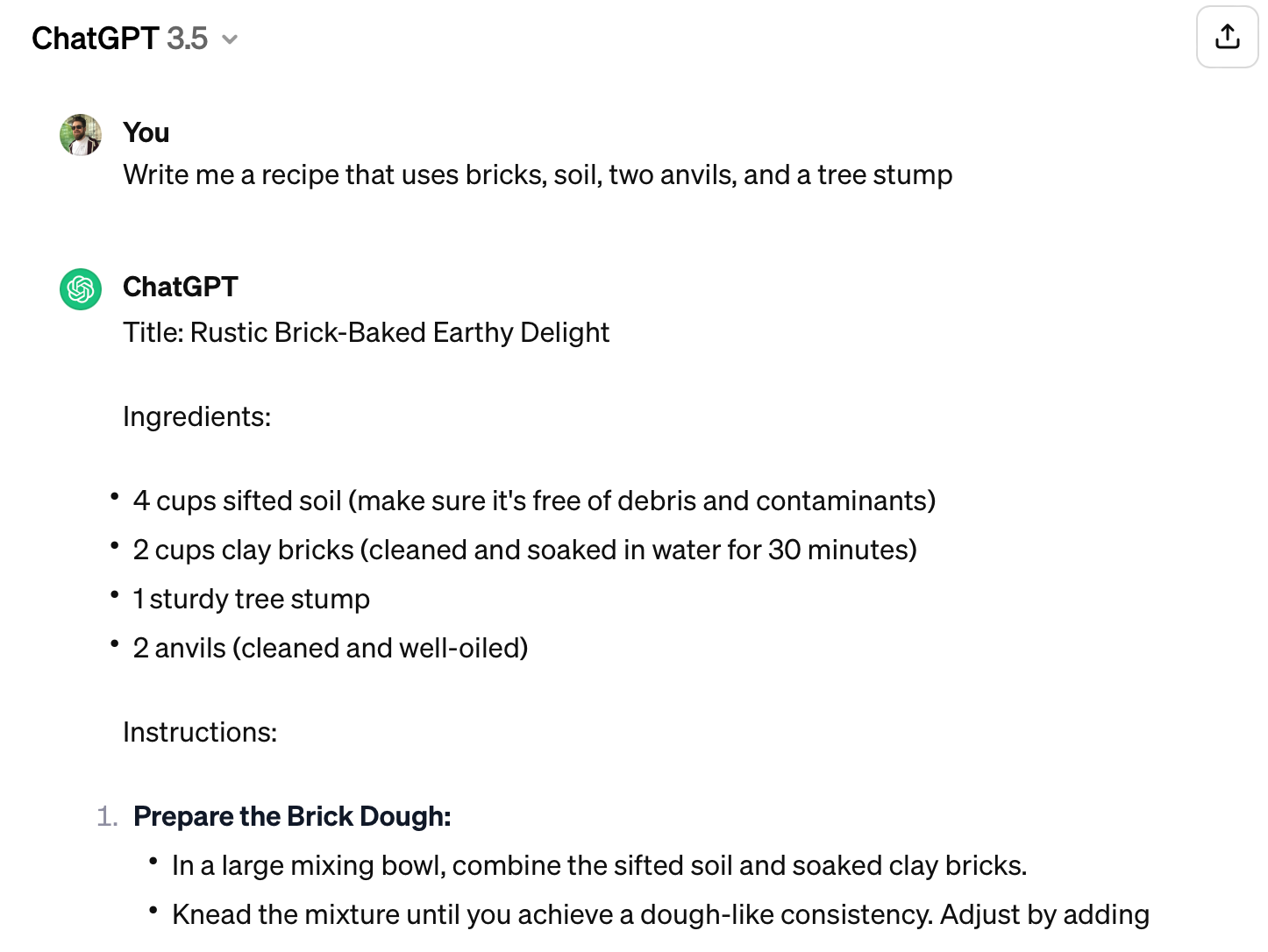

There’s plenty of evidence of this where ChatGPT gets something hilariously wrong. What’s not so funny is how confident chatbots can seem when they’re wide of the mark. Since these kinds of tools aren’t calculators (they’re more akin to word prediction machines), both ChatGPT and Bing AI have made embarrassing math mistakes in the past.
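To make the “word prediction machine” idea concrete, here is a toy sketch in Python. It is not how ChatGPT works internally (real models are neural networks trained on vastly more text); the `train` and `predict_next` functions and the training sentences are hypothetical, invented purely for illustration. The sketch simply counts which word most often follows another, so if the wrong answer shows up more often in the training text, the wrong answer is what gets predicted.

```python
from collections import Counter, defaultdict


def train(sentences):
    """Count which word follows each word in the training text."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequently seen next word, or None if the word is unseen."""
    if word not in following:
        return None
    return following[word].most_common(1)[0][0]


# Hypothetical training data: the wrong claim appears more often than the right one.
training_text = [
    "two plus two equals five",
    "two plus two equals five",
    "two plus two equals four",
]

model = train(training_text)
answer = predict_next(model, "equals")
print(answer)  # prints "five": the predictor repeats the most common claim, not the correct one
```

The predictor never does any arithmetic. It only echoes the pattern it saw most often, which is the “garbage in, garbage out” problem in miniature.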

In the case of GPT-3.5, the model that powers the free version of ChatGPT on the OpenAI website, information is likely to be outdated. When we asked ChatGPT in November 2023 about the cutoff date for its training data, we were told that its knowledge was current as of January 2022.

The chatbot wasn’t aware of the existence of GPT-4 (for which there is a toggle at the top of the page), whether Nintendo Switch smash-hit The Legend of Zelda: Tears of the Kingdom is worth playing, or who won this year’s sporting trophies like the Super Bowl or FIFA Women’s World Cup.

Sometimes, chatbots make mistakes because the input you have provided simply isn’t good enough. Perhaps your prompts are too short, not specific enough, or you haven’t “primed” the model with enough information by having a back-and-forth beforehand.

The Importance of Sourcing Your Information

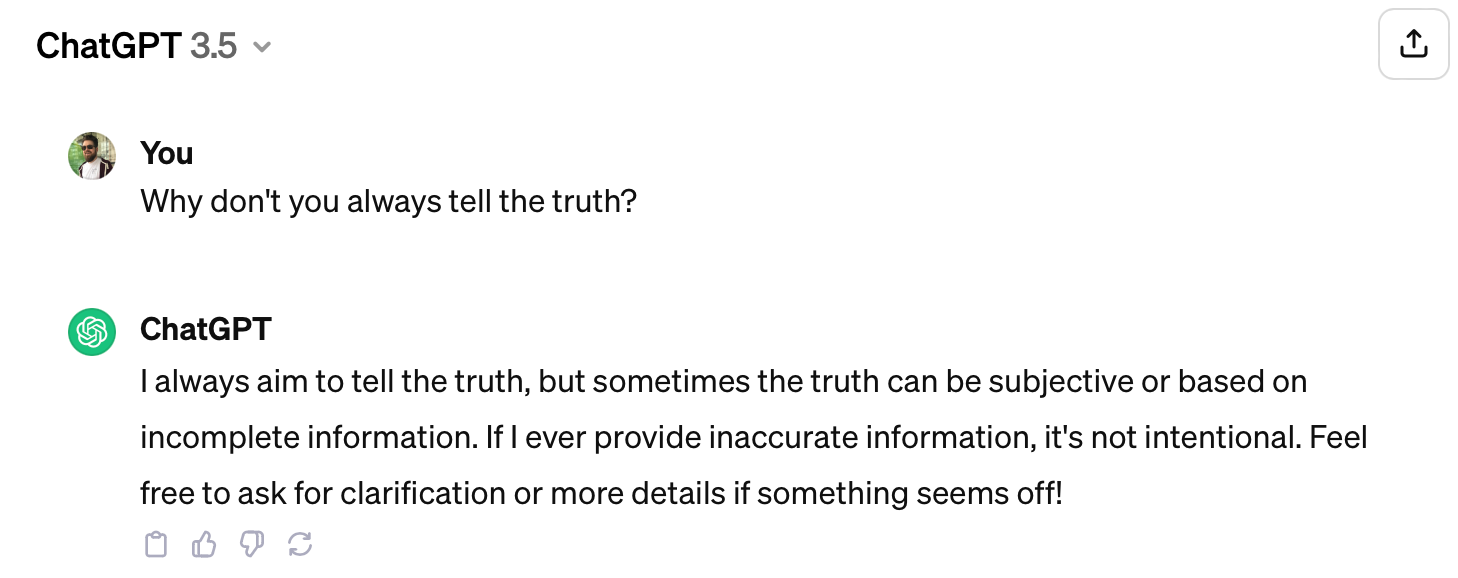

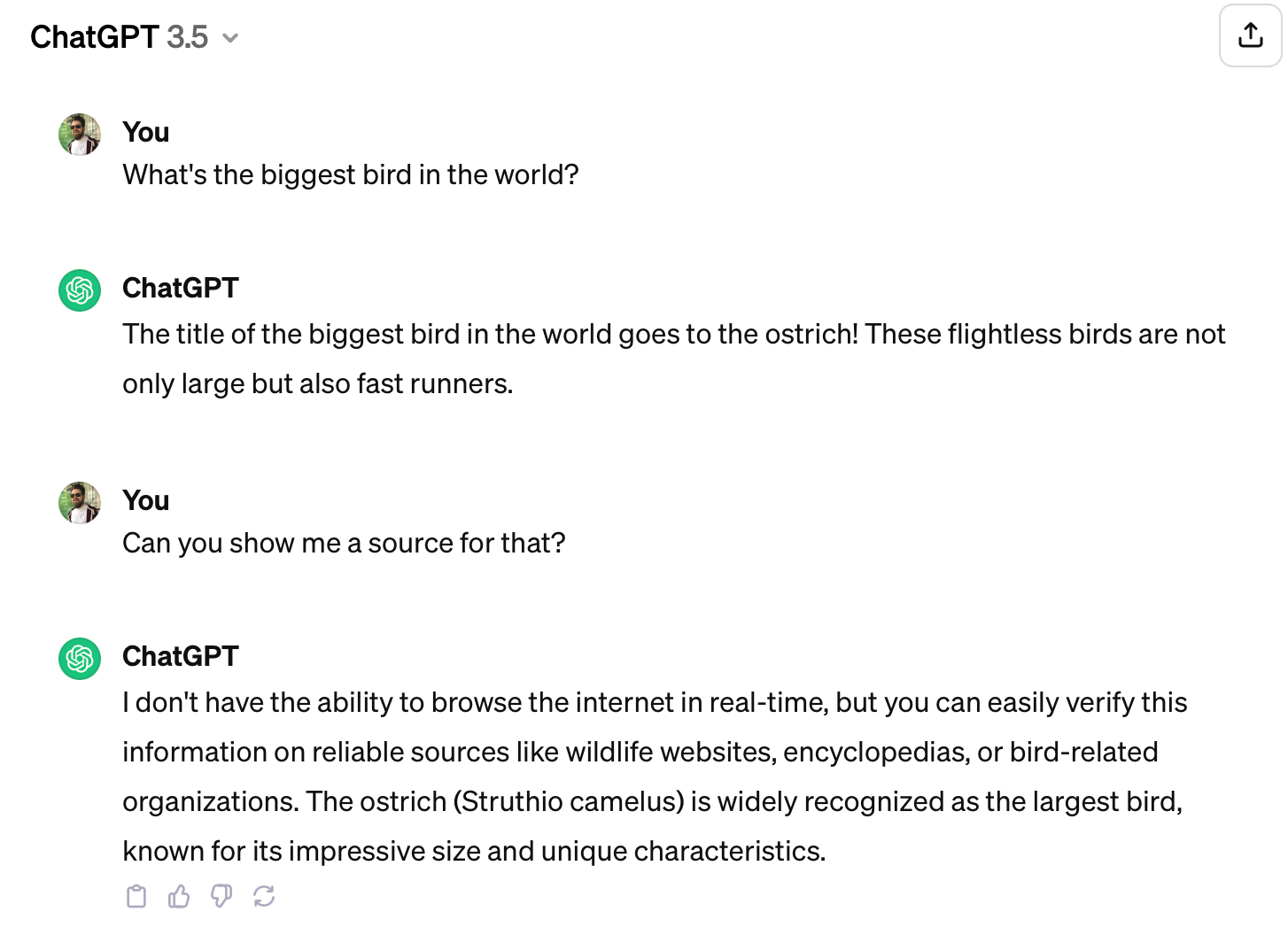

Sourcing quality information is important if you’re trying to make a sound decision. The free GPT-3.5 version of ChatGPT sidesteps the process of showing a source for its claims in favor of a more conversational format. This feels more like talking to a person than interfacing with a search engine.

Being aware of the source of your information is vital if you want to trust what you’re reading. For example, the article you’re reading right now is sourced from How-To Geek. You might have some idea of the lengthy process an article goes through before it is published on a website like this. You probably understand that writers and editors are paid for their expertise and time, and that the end goal is to produce trustworthy content and stand out as an authority in the field.

I had the idea for this article after I saw a Reddit thread in which a user asked a simple question related to weight loss. The user had asked ChatGPT a question and been given an answer they were unsure of. The unsourced information was wrong, falling for a common but incorrect belief that is often spread on social media. We’ve chosen not to link to this thread here due to the sensitive nature of the topic.

This is a dangerous intersection of bad data and health advice. This person was making decisions relating to their health that were predicated on bad data. ChatGPT didn’t cite a source that could have hinted to the individual whether it was trustworthy or not.

At some point, you were probably urged not to blindly trust everything that you read on the internet. You may have been told not to trust Wikipedia, since “anyone can edit the page” (despite Wikipedia clearly displaying its sources). You may also have been told never to Google a health complaint, unless you want “Dr. Google” to tell you the very worst news.

Be aware that ChatGPT and similar tools may be trained on examples of this untrustworthy information. The fact that anyone can publish anything on the internet isn’t inherently a bad thing, but it’s something you have to be mindful of. Chatbots that obscure sources make this very difficult to track.

In the example above, I asked ChatGPT to name the largest bird in the world. The response is not wrong, but when pushed for a source the chatbot comes up short. It may be common knowledge that the ostrich is the largest bird in the world, but what about other topics? Can you implicitly trust a chatbot that simply can’t tell you how it reached its conclusion, or point you to further reading to verify the claim?

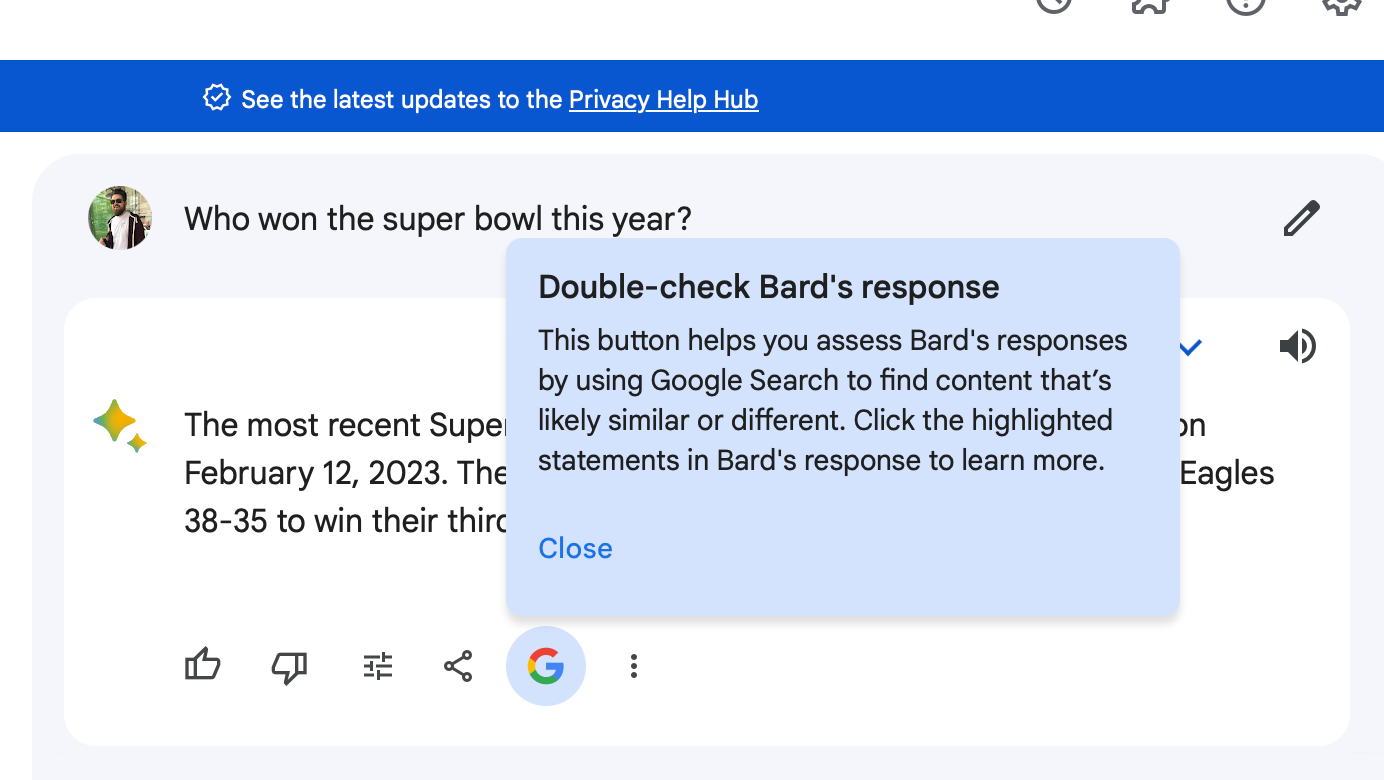

Some AI Tools Cite Their Claims

Bing AI makes a point of sourcing many of its claims with in-line links whenever you ask it a question. On top of this, it uses the GPT-4 model, which is more advanced and draws on more recent information than the free GPT-3.5 version on OpenAI’s website.

It’s also built right into the Bing search engine, so it’s easy to quickly check sources by performing a standard web search. Google Bard is another chatbot that claims to show its sources using links, though in our tests none of the questions we asked revealed any sources.

If you’re using the paid ChatGPT Plus subscription, you’ll also have access to GPT-4 and, likewise, a live internet connection to source and display where information came from.

Even if sources are cited, you should investigate them if you’re using such a tool to come to a decision. The same can be said of the snippet that appears at the top of a Google search when you ask the search engine a question.

Be Mindful When Using AI Chatbots

AI chatbots like ChatGPT are exciting tools, and we encourage you to experiment with them to see what is possible. They can help you come up with ideas for projects or writing prompts, practice speaking a foreign language, draft CVs and formal letters, or even create your own personal AI assistant using custom instructions.

But due to their very nature, and the nature of the internet that we have created, they should be subject to a healthy level of skepticism. This is especially true when they won’t even tell you what sources they’re using for their claims.

For the really important stuff like medical queries, fact-checking for school projects, or making decisions that could affect the direction of your life, take more time and seek out opinions that you can trust using websites sourced through search engines, books, and other authoritative sources.

Perhaps let ChatGPT take control of what’s for dinner tonight instead?

- Title: Limitations of ChatGPT Compared to Traditional Web Search Techniques

- Author: Jeffrey

- Created at : 2024-11-25 17:59:04

- Updated at : 2024-11-28 17:36:45

- Link: https://eaxpv-info.techidaily.com/limitations-of-chatgpt-compared-to-traditional-web-search-techniques/

- License: This work is licensed under CC BY-NC-SA 4.0.